NUS CS Course Reviews Part 6#

Published: December 15, 2025

My Year 4 Semester 1 has recently concluded. It has been a relatively relaxing semester without many commitments. In particular, I took CS5234 Algorithms at Scale and began officially working on an FYP. This blog will be my review on CS5234.

CS5234 Algorithms at Scale#

I took this in AY25/26 Sem 1 under Prof. Chen Yu. My final grade is A+.

Quiz (20%)

Homeworks (40%)

Project (40%)

This is a challenging yet very eye-opening course. There are over 100 students, majority of which are masters or PhDs. Through discussions with my friends, I realised that there is a decent amount of overlap between the materials of CS5234 and CS5330 Randomized Algorithms. However, the focus of CS5234 is fundamentally different. In CS5234, one mainly works with two models of computation that differ from the classical model in how the inputs are being read.

The first model of computation is the query model, in which one can randomly access (i.e. query) any symbol of the input at any time. We are interested in the query complexity, defined as the number of queries required to solve a given problem. The idea is that we work under a setting in which the input is so massive that we cannot afford to read every single symbol of the input. In other words, we are interested in algorithms with sublinear query complexity., i.e. sublinear algorithms. However, most interesting problems can never be solved exactly without full input access, as most of the time the bits that we didn’t read can be crucial in determining the correct answer.

This is when probability comes into play. We allow algorithms to be randomized, and we relax our requirements by allowing an algorithm to be correct as long as it is very likely to be very close to the correct answer. This intuitively opens doors to estimations of the correct answer by leveraging the power of probability and statistics. For example, say we are given a binary string of length \(n\) and we want to estimate the number of 1s in the string. With sublinear queries, the following sampling technique is natural: First, randomly sample \(k\) bits (the value of \(k\) is to be decided later). Then, count the number of 1s in the sample. Call this count \(X\). Finally, output \(\frac{n}{k}X\). It can be shown that if we want to be within \(\epsilon n\) additive error of the correct answer most of the time, it suffices to choose \(k = O(\frac{1}{\epsilon^2})\).

The second model of computation we investigate is the streaming model, in which the input is received in a stream. For every symbol of the input we receive, we will never be able to access the same symbol again unless we write it down. Contrary to the query model, we will eventually have full access to the input. However, we impose the handicap that we are only allowed sublinear space, so we cannot afford to memorize the entire input prior to making any computation. As usual, we are looking to estimate something about the input. For example, say we have received a stream of \(n\) numbers. Among these numbers, how many distinct numbers have we received?

Under the streaming model, there is an interesting algorithm design technique known as sketching. As the name suggests, one keeps a sketch of the input received. Again as the name suggests, this sketch is in general not an accurate representation of the actual input. One then makes an estimation based on this sketch. In our example above, a rough idea is to use a hash table to keep track of which numbers have been received, all while being careful that hash collisions do not happen too frequently so as to distort the actual number of distinct numbers by too large of a margin.

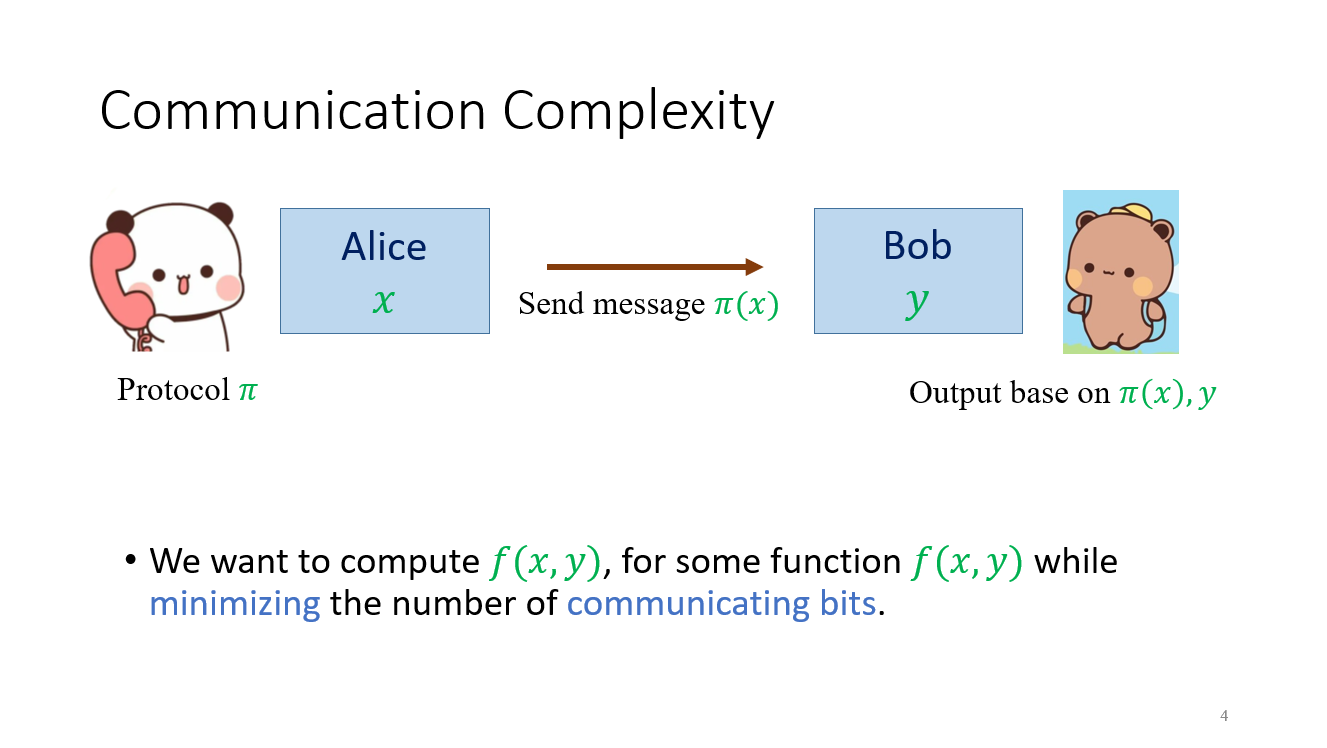

Apart from designing query and streaming algorithms, what I especially like about the course is that it also discusses algorithmic lower bounds. Under the query model, we introduce Yao’s Principle and show how it can be used to establish the inexistence of sublinear algorithms for certain problems. Under the streaming model, the story gets a little bit more interesting. We deviate to introduce the communication model and investigate the communication complexity of various problems. It can be shown that the communication model is, in some sense, more powerful than the streaming model, and so any lower bound for communication protocols also implies a lower bound for streaming algorithms. In turn, lower bounds in communication complexity can be obtained using a little bit of information theory. This came as a surprise for me as I just took CS3236 in the previous semester and didn’t really expect the materials to come into play this quickly into the future.

Prof. Yu’s slides introducing the communication model, which I find kind of cute.#

Content Difficulty: 9/10. Especially in the first half of the semester, it was pretty challenging for me to get used to the tones, notations, and techniques specific to the area of sublinear and streaming algorithms. Remember that in CS3230, we spent about two weeks learning about randomization? The thing about defining indicator random variables and applying Chebyshev’s inequality / Chernoff bounds? I don’t, because I wasn’t paying attention. It came back to haunt me, but thankfully I managed to push through and caught up with all of these before the midterm quiz. I attribute this success to the teaching team, but also to a decision I made in week 1 to make my own lecture notes. Every lecture note has been extremely time-consuming to make, and I eventually stopped after 5 lectures. The notes have, however, helped me tremendously, so for the benefit of people, I will eventually port these lecture notes into this website. Stay tuned for that :)

Moving into the second half of the semester, I kind of got used to the difficulty and have already established a good amount of foundation needed to survive through the rest of the course. The course gets relatively easier because we are mostly applying the theory of query and streaming algorithms to solve natural problems like clustering and a wide array of graph problems. Sometimes, we had to prove lower bounds via reductions. Having taken CS5230 and been working with reductions in my FYP, this is no big deal for me. In fact, in dealing with hardness of approximation under the sublinear setting, we even looked at some gap reductions which has indirectly helped with my FYP.

Workload: 9/10. 3 hour weekly lectures. Well, Prof. Yu rarely uses the entire three hours. Officially, the last hour is the tutorial slot, but there is no tutorial, and so it became a one-hour buffer time in case he couldn’t finish within two hours. The main workload comes from the four homeworks. There are two homeworks in each half of the semester, and each homework is due two weeks from its release. I spent an average of 16 hours (already excluding breaks) working on each homework. In the end, I left out one subquestion in Homework 3 and one question in Homework 4 unattempted. For myself specifically, I also spent a considerable amount of time making lectures notes for the first half of the semester. Lastly, there is a project component, but more on that below.

Profs/TAs: 9/10. The teaching team has been great and is especially responsive to emails. Prof. Yu has shown great enthusiasm towards the materials he is teaching. He has been very patient answering student’s questions both online and offline. He had two TAs, namely Yanyu and Mingyang. One of them can be seen regularly attending the lectures. Both have been dilligent with their grading and have given personalised feedback when I emailed them for clarifications. The grading of Homework 3, 4 as well as the project did appear kind of rushed, presumably due to the lack of time.

Assessment. There is a quiz in week 7 to ensure that we really do understand the essential fundamentals covered in the first half of the semester. Right before the quiz, I finished my 5th and final lecture note and went into the quiz with a less-than-one-day revision of lecture 6 on communication complexity. I wasn’t too worried because I am relatively comfortable with algorithmic lower bounds. The quiz went unexpectedly well for me.

There is a group project to be submitted by Sunday of week 13. I worked in a team of one, i.e. myself, to conduct a literature review of the Travelling Salesman Problem (TSP) under the query model. I chose this topic mainly because it sounds a bit funny to work on the same problem in both my FYP and this course project. However, in reality, there isn’t much overlap between the two projects. The CS5234 project focuses on the query model and the majority of my literature review turns out to be on the estimation of MST costs. Meanwhile, my FYP focuses on algorithmic lower bounds of TSP in the classical model, so it is all about reductions.

Working on the project alone gave me the luxury to delay everything until the very last minute… and beyond. One requirement of the project is that we choose one previous work to describe in detail. I spent three days reading a paper by Czumaj and Sohler, understood it to a pretty good extent and submitted my literature review late by one day. I am not particularly proud of the work I produced in the end, as it was very rushed, but I find it impressive nonetheless the limitations of human under pressure. To be fair, I have decided on my project topic relatively early and have spent a few weeks preparing the outline of the literature review. All I had to do was to read the said paper. It was an interesting read, to the point where I am not willing to give up and just submit some garbage to meet the deadline. Instead, I took the L in exchange for a more thorough understanding of the paper. I also don’t think my final grades will change under either decision.